Detecting Human-Object Contact in Images

Yixin Chen1*, Sai Kumar Dwivedi2, Michael J. Black2, and Dimitrios Tzionas3

1 Beijing Institute of General Artificial Intelligence, 2 Max Planck Institute for Intelligent Systems, 3 University of Amsterdam. * Work done while interning at MPI-IS (affiliation 2).

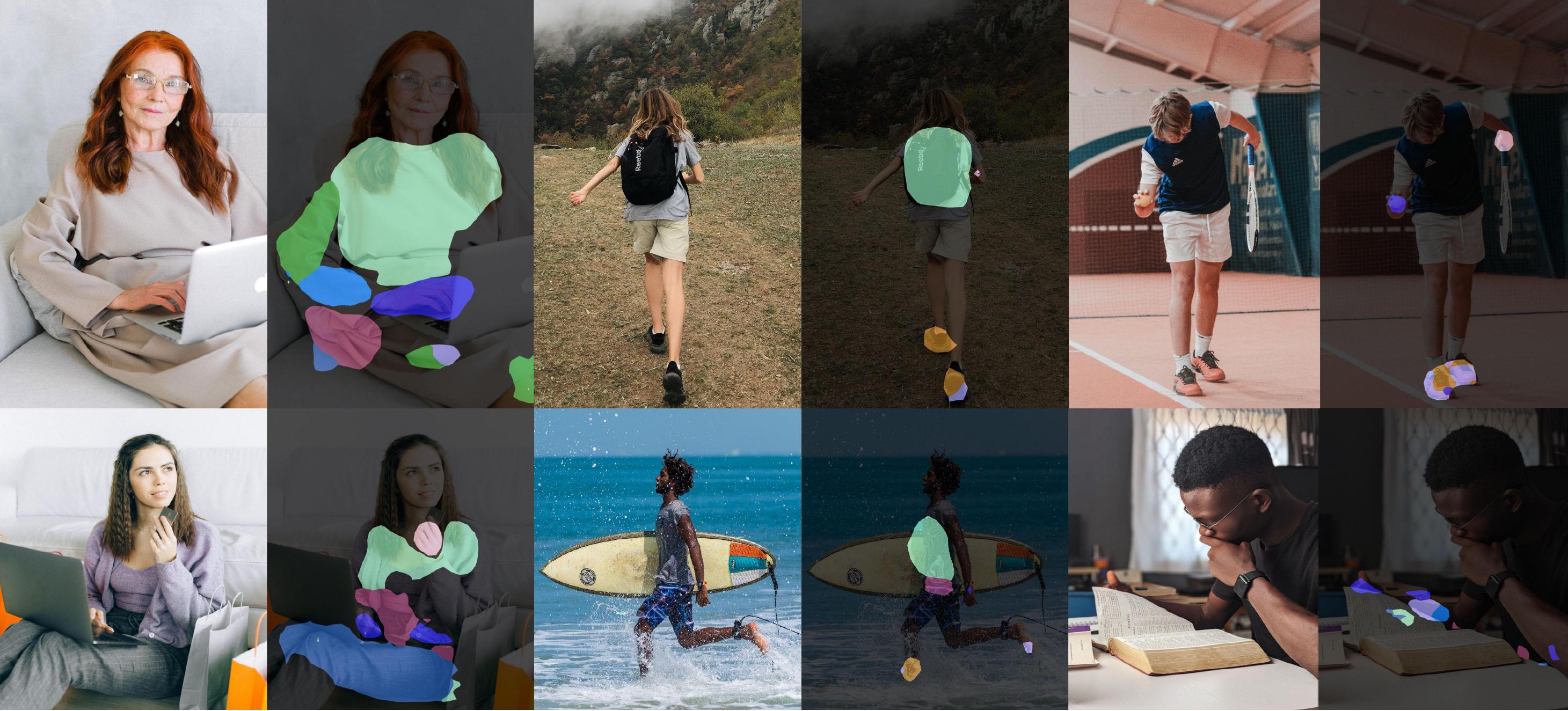

Our contact detector, trained on the HOT (“Human-Object conTact”) dataset, estimates contact between humans and scenes from a single image taken in the wild. Contact is central to how humans interact with their surroundings, yet standard in-the-wild datasets lack this information. Our contact dataset and detector are a step towards providing it in the wild. Images from pexels.com.

Humans constantly contact objects to move and perform tasks. Thus, detecting human-object contact is important for building human-centered artificial intelligence. However, there exists no robust method to detect contact between the body and the scene from an image, and there exists no dataset to learn such a detector. We fill this gap with HOT (“Human-Object conTact”), a new dataset of human-object contacts in images. To build HOT, we use two data sources: (1) We use the PROX dataset of 3D human meshes moving in 3D scenes, and automatically annotate 2D image areas for contact via 3D mesh proximity and projection. (2) We use the V-COCO, HAKE and Watch-n-Patch datasets, and ask trained annotators to draw polygons around the 2D image areas where contact takes place. We also annotate the body part involved in each contact. We use our HOT dataset to train a new contact detector, which takes a single color image as input, and outputs 2D contact heatmaps as well as labels of the body parts that are in contact. This is a new and challenging task that extends current foot-ground or hand-object contact detectors to the full generality of the whole body. The detector uses a part-attention branch to guide contact estimation through the context of the surrounding body parts and scene. We evaluate our detector extensively, and quantitative results show that our model outperforms baselines, and that all components contribute to better performance. Results on images from an online repository show reasonable detections and generalizability.
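To make "3D mesh proximity and projection" concrete, here is a minimal sketch of the general idea. It is not the HOT annotation pipeline. The function name `contact_mask_from_meshes`, the 2 cm distance threshold, the brute-force nearest-neighbour search, and the simple pinhole camera model are all illustrative assumptions. Body vertices that lie close to the scene are marked as in contact and projected into the image to form a 2D mask.

```python
import numpy as np

def contact_mask_from_meshes(body_verts, scene_verts, K, image_hw, dist_thresh=0.02):
    """Hypothetical helper: project body vertices near the scene into a 2D mask.

    body_verts:  (N, 3) body mesh vertices in camera coordinates (metres).
    scene_verts: (M, 3) scene mesh vertices in camera coordinates (metres).
    K:           (3, 3) pinhole camera intrinsics.
    image_hw:    (height, width) of the image.
    dist_thresh: assumed contact threshold in metres (illustrative value).
    """
    # Distance from every body vertex to its nearest scene vertex
    # (brute force; a KD-tree would be preferable for full-size meshes).
    diffs = body_verts[:, None, :] - scene_verts[None, :, :]
    nearest = np.linalg.norm(diffs, axis=-1).min(axis=1)
    in_contact = nearest < dist_thresh

    # Keep only contact vertices in front of the camera.
    pts = body_verts[in_contact]
    pts = pts[pts[:, 2] > 0]

    h, w = image_hw
    mask = np.zeros((h, w), dtype=bool)
    if len(pts) == 0:
        return mask

    # Pinhole projection: homogeneous pixel coords = K @ point, then divide by depth.
    uvw = pts @ K.T
    uv = uvw[:, :2] / uvw[:, 2:3]
    cols = np.round(uv[:, 0]).astype(int)
    rows = np.round(uv[:, 1]).astype(int)

    # Discard projections that fall outside the image.
    inside = (rows >= 0) & (rows < h) & (cols >= 0) & (cols < w)
    mask[rows[inside], cols[inside]] = True
    return mask
```

The sketch yields only sparse contact pixels. Producing dense contact areas would need an additional step, such as rasterizing the contacting mesh faces, which is omitted here.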

News

11 September 2023

We have released the instance-level contact annotations for HOT v1.0. Check out the download page and the attached code.

19 April 2023

We have released the following:

- Our code on GitHub (see links below)

- The HOT v1.0 dataset, including the HOT-Annotated and HOT-Generated sets.

- The model checkpoint for our CVPR 2023 paper.

Publication

Data and Code

To access the HOT data and checkpoints, click the "Sign In" button in the top-right corner. If you do not already have an account, click "Register", follow the instructions, and agree to our license. Please opt in to communication so that you receive emails about the official release and any updates.

Acknowledgments

We thank:

- Chun-Hao Paul Huang for his valuable help with the RICH dataset and the training code of the BSTRO detector.

- Lea Müller, Mohamed Hassan, Muhammed Kocabas, Shashank Tripathi, and Hongwei Yi for insightful discussions.

- Benjamin Pellkofer for website design, IT, and web support.

- Nicole Overbaugh and Johanna Werminghausen for administrative help.

This work was supported by the German Federal Ministry of Education and Research (BMBF): Tübingen AI Center, FKZ: 01IS18039B.

BibTeX

@inproceedings{chen2023hot,

title = {Detecting Human-Object Contact in Images},

author = {Chen, Yixin and Dwivedi, Sai Kumar and Black, Michael J. and Tzionas, Dimitrios},

booktitle = {{Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)}},

year = {2023},

url = {https://hot.is.tue.mpg.de}

}

Contact

For questions, please contact hot@tue.mpg.de.

For commercial licensing, please contact ps-licensing@tue.mpg.de.